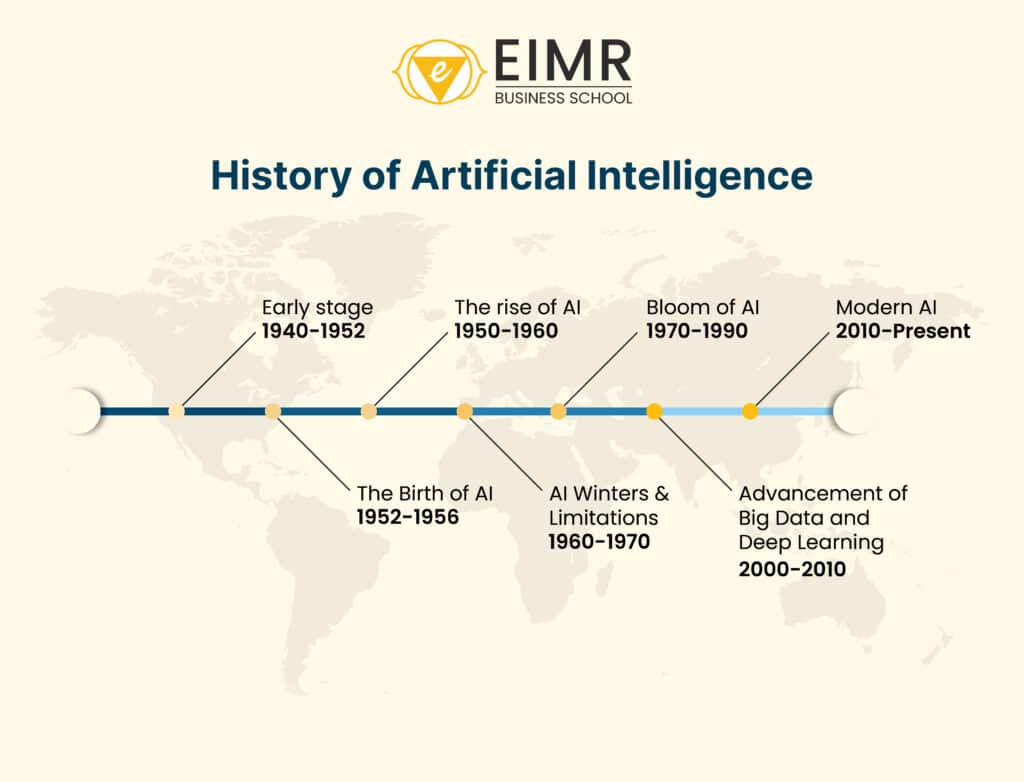

The idea of artificial intelligence is far older than the term itself: for thousands of years, philosophers have debated what it means to think and to be alive. In recent decades, AI has passed significant milestones, and its definition has evolved along the way. Let’s look at the history of AI and its stages of development.

The origins of AI can be traced back to mechanical automata built long before the term “robot” was coined in 1920. The theoretical groundwork was laid in mathematical logic by pioneers like Alan Turing, who in 1936 introduced the concept of a “universal machine” capable of carrying out any computation, given the appropriate algorithm and sufficient time. Turing’s 1950 paper “Computing Machinery and Intelligence” asked whether machines can think and proposed a practical test for whether a machine exhibits human-like intelligence.

The field of AI was formally established at the Dartmouth Conference in 1956, whose proposal introduced the term “Artificial Intelligence.” This marked the beginning of AI as a field of study, one that initially focused on search, problem-solving, and symbolic methods.

The 1950s and 1960s saw the creation of some of the earliest AI programs. These programs proved mathematical theorems and aimed to mimic human problem-solving. LISP became the dominant programming language for AI research because it was well suited to symbolic manipulation. In the mid-1960s, early natural language processing programs such as ELIZA were created to simulate conversation, demonstrating the potential of AI to interact with humans.

In the 1970s, researchers ran into significant obstacles: hardware was limited, and real-world problems proved far more complex than the toy problems early programs had solved. AI systems struggled with more sophisticated tasks and could not be scaled up. The resulting failures, unmet expectations, and funding cuts ultimately led to the first “AI winter.”

After the first AI winter, AI reemerged in the 1980s with the development of “expert systems,” programs that encoded the knowledge of human experts in order to mimic their decision-making. This era also saw renewed interest in machine learning and in neural networks, which draw inspiration from the structure of the human brain. It marked the start of a shift from hand-programmed AI toward systems that learn from data. However, the limitations of these systems and the difficulty of scaling neural networks led to a second AI winter that lasted into the early 1990s. Even so, the period witnessed advances in specific areas such as robotics, computer vision, and natural language processing.

In the early 2000s, the growth of the internet, mobile technology, and big data renewed interest in AI. Cloud computing and graphics processing units (GPUs) made it practical to train large-scale neural networks. Deep learning, a subfield of machine learning, uses neural networks with many layers. Architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are applied to tasks such as image recognition, speech processing, and natural language understanding. These advances sharply reduced error rates in image classification and paved the way for AI to be deployed across a wide range of technologies.
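To make the phrase “multilayered neural network” a little more concrete, here is a minimal sketch of a forward pass through a small stack of layers in plain Python. The layer sizes, the random weights, and the helper names (`dense`, `relu`, `softmax`, `init_layer`, `forward`) are illustrative assumptions, not taken from any particular library. A real deep learning system would learn its weights from data and run on a GPU-backed framework.

```python
import math
import random

def dense(inputs, weights, biases):
    # One fully connected layer: each output is a weighted sum of all inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    # Non-linearity applied between layers; without it the stack collapses to one linear map.
    return [max(0.0, v) for v in values]

def softmax(values):
    # Turn the final layer's raw scores into probabilities that sum to 1.
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    # Hypothetical initialisation: random weights, zero biases (untrained).
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def forward(inputs, layers):
    # Pass the input through every layer in order; ReLU on hidden layers, softmax at the end.
    activations = inputs
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:
            activations = relu(activations)
    return softmax(activations)

rng = random.Random(0)
# Assumed shape: 4 input features -> two hidden layers of 8 units -> 3 output classes.
layers = [init_layer(4, 8, rng), init_layer(8, 8, rng), init_layer(8, 3, rng)]
probs = forward([0.5, -1.2, 3.0, 0.1], layers)
print(probs)
```

Because the weights here are random, the output probabilities are meaningless. The point is only the structure: each layer transforms the output of the one before it, and “deep” simply means there are several such layers.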

Artificial intelligence (AI) is now part of daily life through technologies such as virtual assistants, recommendation systems, and autonomous vehicles. Natural language processing (NLP) in particular has advanced significantly, with models like GPT and BERT greatly improving machines’ ability to process human language. However, the rapid development of AI has raised concerns about ethics, bias, transparency, privacy, and the impact of AI on society. As a result, scientists, researchers, and governments are calling for AI to be developed ethically and used fairly and responsibly.